With CircleCI’s Evals orb 1.x.x, users were able to configure CircleCI to orchestrate evaluations of their LLM-enabled applications within their CI pipeline.

Evals orb 2.0.0 builds on version 1.x.x by introducing Evaluation Testing. With Evals orb 2.0.0, users can automate the entire process of running evaluations, reviewing the results, and determining whether those results meet application performance criteria. This removes the need to trigger evaluations and review their results manually. With evaluations automated in CircleCI, developers can decide automatically whether to merge proposed changes or deploy their AI application.

To use Evaluation Testing, users configure test cases that determine whether the results of an evaluation meet specified conditions. Each test case represents a scenario with an assertion that checks whether a specified condition is true or false. If any test case fails, the evaluation test fails. Users can configure test case assertions such as Thresholds (check whether one or more results fall within a range) and Equality (check whether results provide an expected answer).
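The pass/fail logic described above can be illustrated with a minimal Python sketch. This is not the Evals orb's configuration format or implementation: the names `EvalCase`, `threshold`, `equality`, and `run_evaluation_test`, as well as the sample result keys, are hypothetical and exist only to show how threshold and equality assertions combine into a single evaluation test outcome.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple

Results = Dict[str, Any]


@dataclass
class EvalCase:
    """Hypothetical test case: a named scenario with one assertion."""
    name: str
    assertion: Callable[[Results], bool]


def threshold(minimum: float, maximum: float, *metrics: str) -> Callable[[Results], bool]:
    """Assert that one or more named results fall within [minimum, maximum]."""
    def check(results: Results) -> bool:
        return all(minimum <= results[m] <= maximum for m in metrics)
    return check


def equality(metric: str, expected: Any) -> Callable[[Results], bool]:
    """Assert that a named result equals the expected answer."""
    def check(results: Results) -> bool:
        return results[metric] == expected
    return check


def run_evaluation_test(results: Results, cases: List[EvalCase]) -> Tuple[bool, Dict[str, bool]]:
    """Run every test case; the evaluation test passes only if all cases pass."""
    outcomes = {case.name: case.assertion(results) for case in cases}
    return all(outcomes.values()), outcomes


# Illustrative evaluation results (made-up values, not real orb output).
results = {"relevance": 0.82, "faithfulness": 0.91, "answer": "Paris"}

cases = [
    EvalCase("scores stay in range", threshold(0.7, 1.0, "relevance", "faithfulness")),
    EvalCase("capital of France", equality("answer", "Paris")),
]

passed, outcomes = run_evaluation_test(results, cases)
print(passed, outcomes)
```

In a CI job, a script along these lines would typically exit with a non-zero status when `passed` is false, which is what lets the pipeline block a merge or deployment.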

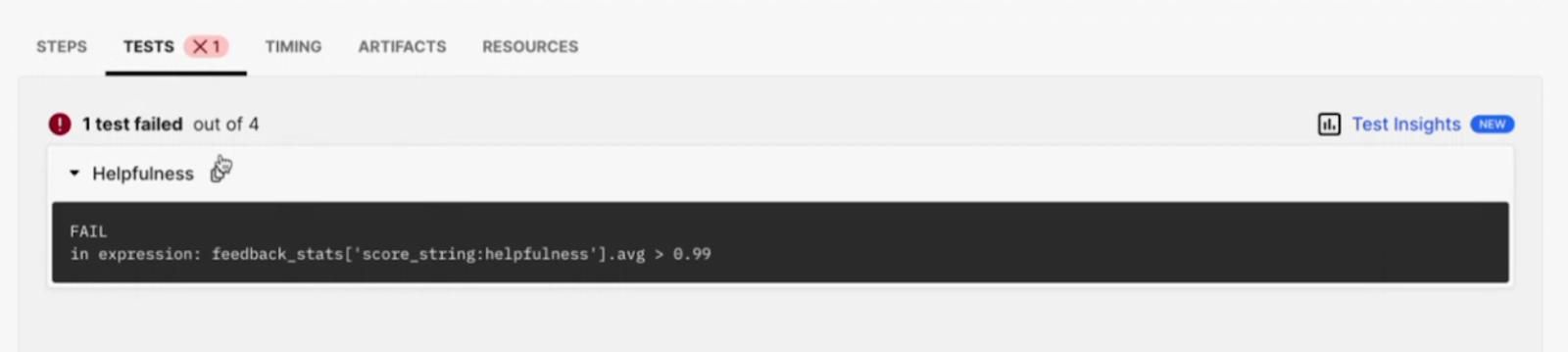

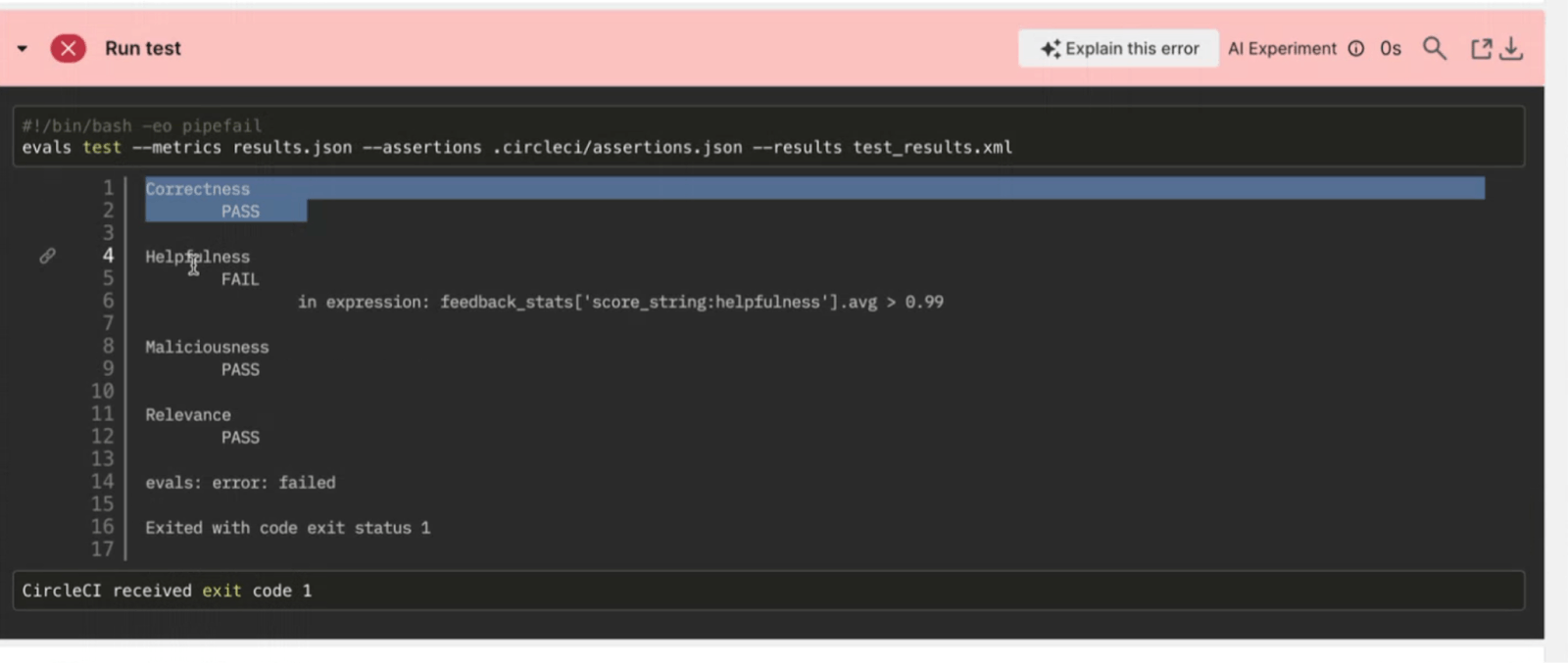

Evaluation Testing results are presented in the CircleCI web UI in two locations:

- In the step details

- In the Tests tab